1. Introduction

At present, the number of images and videos on the network is growing geometrically. This rapid expansion of online visual information is having a profound impact on the development of society and on people's daily lives. How to organize and manage visual information effectively, so that users can quickly search vast amounts of it and find the information they need, is an open problem, and visual information retrieval aims to solve it. Compared with text, speech and other media, images and videos carry more information and are more intuitive and vivid, so users accept them more readily [1-2]. However, images and videos are highly unstructured and their content can be expressed ambiguously, which makes image and video retrieval difficult [3-4].

At present, video annotation methods fall into three main categories: manual annotation, rule-based annotation and machine-learning-based annotation. Manual annotation is easy to implement, but it is time-consuming and laborious, and it is prone to human error. In addition, different annotators apply different judgment standards, and these differences affect the consistency of the annotation [5]. The rule-based method first uses expert knowledge of a particular domain to establish classification rules and then annotates the video according to those rules. The machine-learning-based method learns, from training samples or manually annotated video data, a model for each semantic concept (or a model of the differences between concepts), and then uses these models to label the remaining video data [6].

Sports video annotation has a wide range of applications and also has considerable academic value; many researchers treat it as a subject in its own right. Its core technology is the analysis of events and of the relationships between them. Once the relevant events are annotated and organized, the query needs of most users can be satisfied [7]. From an academic point of view, event detection and recognition is a typical problem of pattern recognition and computer vision. In sports video, an event is a temporally ordered structure with high-level abstract semantics, which makes it very challenging to detect. The main difficulty is that video is a multidimensional signal carrying both temporal and spatial information, so the data volume is large and the content is rich.

A semantic event in sports video is a dynamic process that changes over time, so compared with a static target it shows more complex patterns and greater variability. Events are also defined differently in different sports, and they differ in their internal logic or causal structure. If these problems can be solved and a sports video content analysis system built, it can serve as a reference for similar semantic analysis problems and advance video annotation and retrieval as a whole. Since the league's promotion and the entry of Chinese players, basketball has become increasingly popular with the public and an important part of many people's lives. Finding the video segments of interest in a large amount of basketball video is time-consuming and laborious. General viewers are interested in the highlights of a game, whereas basketball experts are interested in segments that reveal players' skills and team tactics [8-9].

Therefore, it is important to study annotation systems for basketball game video that let users retrieve the video segments they want. Shot boundary detection performs shot segmentation. There are two types of transition between shots: abrupt cuts and gradual transitions. A shot change is the switch from one camera to the next; in a gradual transition, this switch is spread over several frames. The key to shot boundary detection is to distinguish genuine shot changes from changes within a shot caused by camera motion and object motion [10].
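The paper does not state which shot boundary detector it uses. As a minimal sketch of the general idea, assuming grayscale frames stored as NumPy arrays, abrupt cuts can be flagged by comparing the histograms of consecutive frames; the function names and the threshold of 0.5 are hypothetical.

```python
import numpy as np


def gray_histogram(frame, bins=64):
    """Normalized gray-level histogram of an 8-bit frame."""
    hist, _ = np.histogram(frame, bins=bins, range=(0, 256))
    return hist / hist.sum()


def detect_cuts(frames, threshold=0.5, bins=64):
    """Indices i where an abrupt cut is flagged between frames[i-1] and frames[i].

    The difference is half the L1 distance between normalized histograms,
    so it lies in [0, 1].
    """
    cuts = []
    prev = gray_histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = gray_histogram(frames[i], bins)
        if 0.5 * np.abs(cur - prev).sum() > threshold:
            cuts.append(i)
        prev = cur
    return cuts
```

This only catches abrupt cuts; gradual transitions spread the change over many frames and need additional handling, for example comparing frames that lie further apart.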

Moving target detection is the foundation of target tracking. Existing methods for detecting moving targets include the optical flow method, the frame difference method and the background subtraction method. The optical flow method estimates the motion field of the image and merges similar motion vectors to separate the foreground from the background. The frame difference method uses the difference between temporally consecutive images to detect moving targets. Background subtraction detects moving targets by comparing the image sequence with a background model. Here, we introduce a background subtraction method based on an adaptive Gaussian mixture model.
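To make the frame difference method concrete, here is a minimal sketch assuming 8-bit grayscale frames stored as NumPy arrays; the function name and the threshold value are illustrative choices, not taken from the paper.

```python
import numpy as np


def frame_difference_mask(prev_frame, cur_frame, threshold=25):
    """Foreground mask from the absolute difference of two grayscale frames.

    Frames are cast to a signed type first so that subtracting uint8
    values cannot wrap around.
    """
    diff = np.abs(cur_frame.astype(np.int16) - prev_frame.astype(np.int16))
    return diff > threshold
```

Frame differencing only responds where intensity changes, so a uniformly colored moving object may be detected only at its edges; background subtraction avoids this by comparing each frame against a model of the background.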

2. Moving Object Detection

2.1. Gaussian Mixture Model (GMM)

Assume $\lambda$ is a Gaussian mixture model with $M$ components [11]. For an $n\times 1$ feature vector $x$, its likelihood function $P(x|\lambda)$ is defined as Equation (1).

The function is a linear combination of $M$ unimodal Gaussian densities $p_i(x)$, where $w_i$ are the weighting coefficients. Each $p_i(x)$ has an $n\times 1$ mean vector $\mu_i$ and an $n\times n$ covariance matrix $\Sigma_i$, as expressed in Equation (2).

Hence a Gaussian mixture model is fully described by the weight, mean vector and covariance matrix of each Gaussian component, i.e. $\lambda$ can be expressed as the triple $\lambda=\{w_i,\mu_i,\Sigma_i\}$, $i=1,\dots,M$.
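Equations (1) and (2) are not reproduced in this text. Assuming they take the standard form $P(x|\lambda)=\sum_{i=1}^{M}w_i\,p_i(x)$ with $\sum_{i=1}^{M}w_i=1$ and $p_i(x)=\frac{1}{(2\pi)^{n/2}|\Sigma_i|^{1/2}}\exp\left(-\frac{1}{2}(x-\mu_i)^{T}\Sigma_i^{-1}(x-\mu_i)\right)$, the likelihood under the triple $\lambda=\{w_i,\mu_i,\Sigma_i\}$ can be evaluated as in this sketch; the function names are hypothetical.

```python
import numpy as np


def gaussian_density(x, mu, sigma):
    """Multivariate normal density p_i(x), mean mu (n,), covariance sigma (n, n)."""
    n = mu.shape[0]
    diff = x - mu
    # Solve sigma @ y = diff rather than inverting sigma explicitly.
    mahalanobis_sq = diff @ np.linalg.solve(sigma, diff)
    norm = np.sqrt((2.0 * np.pi) ** n * np.linalg.det(sigma))
    return np.exp(-0.5 * mahalanobis_sq) / norm


def gmm_likelihood(x, weights, means, covs):
    """P(x | lambda) for lambda = {w_i, mu_i, Sigma_i}, i = 1..M."""
    return sum(w * gaussian_density(x, mu, cov)
               for w, mu, cov in zip(weights, means, covs))
```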

2.2. EM (Expectation Maximization) Algorithm

Let $\lambda$ be the initial value of the GMM parameters and $\overline{\lambda}$ the parameters after one optimization step. The function $Q$ is constructed as Equation (3).

The EM algorithm consists of two steps:

(1) Given the current model, compute the expected statistics that define the function $Q$; this is the E (Expectation) step.

(2) Maximize $Q$ to obtain a new model estimate; this is the M (Maximization) step. Each iteration satisfies $L(\overline{\lambda}|X)\ge L(\lambda|X)$, where $L$ is the likelihood of the training data $X$, so the model parameters converge after repeated iterations. If too many iterations are run, however, the model fits the distribution of the training data too closely and describes the distribution of the test data poorly, i.e. it is over-trained. A satisfactory result is generally achieved after 4-5 iterations.
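For the one-dimensional Hue case used later, the E and M steps have closed forms. This sketch assumes the standard EM updates for a Gaussian mixture (Equation (3) is not reproduced here); the function name, the variance floor `min_var` and the iteration count are illustrative choices.

```python
import numpy as np


def em_gmm_1d(x, weights, means, variances, n_iter=5, min_var=1e-6):
    """Fit a 1-D Gaussian mixture with EM.

    Returns (weights, means, variances, log_likelihoods), where
    log_likelihoods[k] is the data log-likelihood before iteration k.
    """
    x = np.asarray(x, dtype=float)[:, None]          # shape (N, 1)
    w = np.asarray(weights, dtype=float)
    mu = np.asarray(means, dtype=float)
    var = np.asarray(variances, dtype=float)
    log_liks = []
    for _ in range(n_iter):
        # E step: w_i * p_i(x) for every sample and component, shape (N, M).
        dens = w * np.exp(-0.5 * (x - mu) ** 2 / var) / np.sqrt(2.0 * np.pi * var)
        total = dens.sum(axis=1, keepdims=True)
        log_liks.append(float(np.log(total).sum()))
        resp = dens / total                          # posterior responsibilities
        # M step: closed-form parameters that maximize Q.
        nk = resp.sum(axis=0)
        w = nk / x.shape[0]
        mu = (resp * x).sum(axis=0) / nk
        var = np.maximum((resp * (x - mu) ** 2).sum(axis=0) / nk, min_var)
    return w, mu, var, log_liks
```

The recorded log-likelihoods never decrease, which is the property $L(\overline{\lambda}|X)\ge L(\lambda|X)$ stated above.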

2.3. Moving Target Detection Method based on Adaptive Gaussian Mixture Model

We use an adaptive Gaussian mixture model for background modeling and target detection [12]. First, we determine the approximate distribution of the court's dominant color; then we fit it with a Gaussian mixture model and update the model parameters with the EM algorithm. The steps are described below.

2.3.1. Creation of Background Model

Let a dominant-color court pixel $p$ take the values $(x_1,\cdots,x_i,\cdots,x_t)$ at time points $1$ to $t$, where each $x_i$ is a Hue value. $K$ Gaussian distributions are used to model these pixel values, and the probability density function of $x_t$ is given by Equation (4).
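Equation (4) is not reproduced in this text. Assuming the standard adaptive-mixture form (as in Stauffer and Grimson's background model), with $w_{i,t}$, $\mu_{i,t}$ and $\sigma_{i,t}^2$ the weight, mean and variance of the $i$-th Gaussian at time $t$, it would read:

```latex
P(x_t) = \sum_{i=1}^{K} w_{i,t}\,\eta\!\left(x_t;\mu_{i,t},\sigma_{i,t}^{2}\right),
\qquad
\eta\!\left(x;\mu,\sigma^{2}\right) = \frac{1}{\sqrt{2\pi}\,\sigma}
\exp\!\left(-\frac{(x-\mu)^{2}}{2\sigma^{2}}\right),
\qquad \sum_{i=1}^{K} w_{i,t} = 1
```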

2.3.2. Moving Target Detection

For the observed value $x_{t+1}$ of pixel $p$ at time $t+1$, we decide whether it is a background point or a target point as follows:

(1) Compute, in order, the distance $d_t$ between the observed value and each Gaussian distribution until the first distribution satisfying $d_t<T_2$ is found; the empirical value of $T_2$ is 2.5. The distance is given by Equation (5).

If no distribution meets the condition, the distribution with the lowest confidence is replaced by a new Gaussian distribution with mean $x_{t+1}$, a large variance and a small weight. If a matching distribution $j$ is found, check whether $j$ is one of the $g$ background distributions. If it is, the pixel is a background point; otherwise, it is a target point.

(2) Update the parameters of each Gaussian distribution; the weights are updated as in Equation (6).

(3) Sort the updated Gaussian distributions in descending order of $\tilde{w}/\sigma$ to obtain the new, reliable background distributions used for detection at the next time point.
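The three steps above match the adaptive mixture background model of Stauffer and Grimson. The following per-pixel sketch assumes that Equation (5) is the normalized distance $d_t=|x_{t+1}-\mu_{i,t}|/\sigma_{i,t}$, that Equation (6) is the weight update $w_{i,t+1}=(1-\alpha)w_{i,t}+\alpha M_{i,t+1}$ (with $M_{i,t+1}=1$ for the matched distribution and 0 otherwise), and that the $g$ background distributions are the leading ones whose cumulative weight first exceeds a threshold. The class name and the parameters `alpha`, `init_var`, `init_weight`, `min_var` and `bg_ratio` are hypothetical, and one learning rate is used for means and variances for simplicity.

```python
import numpy as np


class PixelGMM:
    """Adaptive mixture of K 1-D Gaussians modelling one court pixel's Hue.

    Hue is assumed to lie in [0, 180). All parameters are illustrative.
    """

    def __init__(self, k=3, alpha=0.05, t2=2.5, init_var=400.0,
                 init_weight=0.05, min_var=4.0, bg_ratio=0.7):
        self.alpha, self.t2, self.bg_ratio = alpha, t2, bg_ratio
        self.init_var, self.init_weight, self.min_var = init_var, init_weight, min_var
        self.w = np.full(k, 1.0 / k)
        self.mu = np.linspace(0.0, 180.0, k)   # spread initial means over Hue
        self.var = np.full(k, init_var)

    def _background_count(self):
        """g: number of leading components whose cumulative weight exceeds bg_ratio."""
        return int(np.searchsorted(np.cumsum(self.w), self.bg_ratio, side="right")) + 1

    def update(self, x):
        """Classify Hue value x, update the mixture, return True if background."""
        k = len(self.w)
        match = np.zeros(k)
        d = np.abs(x - self.mu) / np.sqrt(self.var)   # assumed form of Eq. (5)
        hits = np.flatnonzero(d < self.t2)
        g = self._background_count()
        if hits.size == 0:
            # No match: replace the least confident (last-sorted) component
            # with one centred on x, with a large variance and a small weight.
            j = k - 1
            self.mu[j], self.var[j], self.w[j] = x, self.init_var, self.init_weight
            is_background = False
        else:
            j = int(hits[0])                          # first match in sorted order
            is_background = j < g
            match[j] = 1.0
            self.mu[j] += self.alpha * (x - self.mu[j])
            self.var[j] += self.alpha * ((x - self.mu[j]) ** 2 - self.var[j])
            self.var[j] = max(self.var[j], self.min_var)
        # Weight update (assumed form of Eq. (6)), then renormalize.
        self.w = (1.0 - self.alpha) * self.w + self.alpha * match
        self.w /= self.w.sum()
        # Step (3): sort components by w / sigma in descending order.
        order = np.argsort(-self.w / np.sqrt(self.var))
        self.w, self.mu, self.var = self.w[order], self.mu[order], self.var[order]
        return is_background
```

In use, one such model per court pixel is fed that pixel's Hue value in every frame, and pixels for which `update` returns False form the foreground mask.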

The detected targets include players, the basketball and court regions whose color differs from the dominant color. The center circle and the foul lane can be recognized and removed because the areas of their connected regions are very distinctive. The remaining moving targets, the players and the basketball, are then recognized with a target recognition algorithm based on Hu invariant moments.
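Hu invariant moments are built from normalized central moments $\eta_{pq}=\mu_{pq}/\mu_{00}^{1+(p+q)/2}$. As a minimal sketch (the paper does not say which of the seven moments it uses or how it classifies them), the first two, $\phi_1=\eta_{20}+\eta_{02}$ and $\phi_2=(\eta_{20}-\eta_{02})^2+4\eta_{11}^2$, can be computed from a binary region mask; the function name is hypothetical. OpenCV's `cv2.moments` and `cv2.HuMoments` compute all seven.

```python
import numpy as np


def hu_moments_12(mask):
    """First two Hu invariant moments (phi1, phi2) of a binary region.

    mask: 2-D array, non-zero inside the region (assumed non-empty).
    """
    ys, xs = np.nonzero(mask)
    m00 = float(xs.size)                      # area = zeroth-order moment
    dx, dy = xs - xs.mean(), ys - ys.mean()   # offsets from the centroid

    def eta(p, q):
        # Normalized central moment: mu_pq / mu_00 ** (1 + (p + q) / 2).
        return np.sum(dx ** p * dy ** q) / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    phi1 = n20 + n02
    phi2 = (n20 - n02) ** 2 + 4.0 * n11 ** 2
    return phi1, phi2
```

Because these values do not change when a region is translated or rotated, they can be compared against stored values for player-shaped and ball-shaped regions.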

3. Tracking of Moving Targets

3.1. Moving Target Tracking based on Camshift

Moving target tracking, a branch of computer vision research, has become an important part of video processing research in recent years. Its results are widely used in human-computer interaction and video surveillance. Classical tracking methods include template matching, optical flow, filtering methods, the Mean-shift algorithm based on color histogram distributions, the Camshift algorithm and particle filter tracking.

In basketball match video, players have the following characteristics:

(1) they are large targets with distinctive color features;

(2) they move more slowly than the basketball;

(3) they often occlude one another.

Based on these features, we choose the Camshift algorithm to track players.

The Camshift (Continuously Adaptive Mean Shift) algorithm is an improved form of Mean-shift that uses the color information of the tracked target as its feature. It first computes the target's color distribution, then projects it onto the next frame, searches for the target, updates the search window and moves on to the following frame; repeating these steps tracks the target continuously. Before each search, the search window must be initialized with the current location and size of the moving target.

Camshift restricts its search window to the neighborhood where the moving target is likely to appear, which reduces the amount of computation and saves search time, so the algorithm runs in real time. It finds the moving target by color feature matching, and because the target's color changes little from frame to frame, the method is robust. Since Camshift builds on Mean-shift, we first briefly introduce the Mean-shift algorithm.

3.1.1. Introduction to the Mean-Shift Algorithm

The Mean-shift algorithm is a popular nonparametric fast matching algorithm. Its advantages include a small amount of computation, good real-time performance, target modeling with a kernel-weighted histogram, and insensitivity to occlusion at the target's edges and to uneven background motion. Mean-shift was first proposed by Fukunaga and Hostetler in 1975; the term originally meant the offset of the mean vector, and its meaning has broadened as the theory developed. Mean-shift is an iterative procedure: compute the shifted mean of the current point and move the point there, then use that point as the new starting point and continue until a stopping condition is met.

Mean-shift is a nonparametric kernel density gradient estimation algorithm. It locates the target by iteratively climbing to an extreme value (a mode) of the probability distribution. Its steps are as follows:

(1) Select a search window of size $s$ in the image.

(2) Compute the centroid of the search window.

Compute the zeroth-order moment, as in Equation (7).

Compute the first-order moments in $x$ and $y$, as in Equation (8).

Compute the centroid location of the search window, as in Equation (9).
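Equations (7)-(9) are not reproduced here. Assuming they are the standard image moments of the probability image $I(x,y)$ inside the window, $M_{00}=\sum_x\sum_y I(x,y)$, $M_{10}=\sum_x\sum_y x\,I(x,y)$ and $M_{01}=\sum_x\sum_y y\,I(x,y)$, with centroid $x_c=M_{10}/M_{00}$ and $y_c=M_{01}/M_{00}$, the following sketch re-centres the window on the centroid until it stops moving. The function name and the $(x, y, w, h)$ window convention are hypothetical.

```python
import numpy as np


def mean_shift(prob, window, max_iter=20):
    """Move a search window to the local mode of a probability image.

    prob:   2-D array of non-negative weights (e.g. a back projection).
    window: (x, y, w, h) with (x, y) the top-left corner; must fit in prob.
    Returns the converged window as a tuple.
    """
    x, y, w, h = window
    rows, cols = prob.shape
    for _ in range(max_iter):
        roi = prob[y:y + h, x:x + w].astype(float)
        m00 = roi.sum()                        # zeroth-order moment
        if m00 == 0:
            break                              # no mass under the window
        ys, xs = np.mgrid[0:roi.shape[0], 0:roi.shape[1]]
        m10 = (xs * roi).sum()                 # first-order moment in x
        m01 = (ys * roi).sum()                 # first-order moment in y
        xc = x + m10 / m00                     # centroid, image coordinates
        yc = y + m01 / m00
        # Re-centre the window on the centroid, keeping it inside the image.
        nx = min(max(int(round(xc - (w - 1) / 2)), 0), cols - w)
        ny = min(max(int(round(yc - (h - 1) / 2)), 0), rows - h)
        if (nx, ny) == (x, y):
            break                              # converged
        x, y = nx, ny
    return x, y, w, h
```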

3.1.2. Implementation of the Camshift Algorithm

The Camshift algorithm builds on the Mean-Shift algorithm and is implemented in the following steps:

Step 1 Compute the hue histogram of the search area in the current frame.

Step 2 Compute the back projection.

Step 3 Run the Mean-Shift search.

Step 4 Apply the CamShift update.

(1) CamShift feature extraction

Camshift uses the target's hue histogram in the back projection calculation to obtain the back projection image. To obtain this image, the hue histogram is computed and each pixel of the source image is then converted into the probability of its hue, giving a hue probability distribution image. The process is described below.

Step 1 Convert the source image to the HSL color space and extract its Hue channel.

Step 2 Compute the histogram of the Hue values. Suppose the histogram has $N$ bins, the positions of the $n$ pixels are $\{x_i\}$, $i=1,\dots,n$, the histogram is $\{\overline{q}_u\}$, $u=1,\dots,N$, and $h(x_i)$ is the Hue value of the pixel at $x_i$. The histogram distribution is given by Equation (10).

Step 3 Rescale the histogram bins from $[0,\max(q)]$ to $[0,255]$ using Equation (11).

Step 4 Replace each pixel $x_i$ of the source image whose Hue falls in bin $u$ with the rescaled value $p_u$. Processing every pixel in this way yields the back projection image.
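A minimal sketch of Steps 2-4, assuming the Hue image has already been extracted (Step 1) with values in $[0,180)$ as in OpenCV's 8-bit convention, that Equation (10) is the bin count $\overline{q}_u=\sum_{i=1}^{n}\delta[h(x_i)-u]$, and that Equation (11) is the linear rescaling $p_u=\min\left(\frac{255}{\max(\overline{q})}\overline{q}_u,\,255\right)$. The function names and the choice of 16 bins are hypothetical.

```python
import numpy as np


def hue_bins(hue, n_bins=16, hue_max=180):
    """Bin index of each Hue value, clipped to [0, n_bins - 1]."""
    return np.clip((hue.astype(int) * n_bins) // hue_max, 0, n_bins - 1)


def hue_histogram(hue_roi, n_bins=16, hue_max=180):
    """Step 2: N-bin Hue histogram q_u of the target region."""
    return np.bincount(hue_bins(hue_roi, n_bins, hue_max).ravel(), minlength=n_bins)


def rescale_histogram(q):
    """Step 3: map bin heights from [0, max(q)] to [0, 255]."""
    q = q.astype(float)
    if q.max() == 0:
        return q
    return np.minimum(q * 255.0 / q.max(), 255.0)


def back_project(hue_image, p, n_bins=16, hue_max=180):
    """Step 4: replace each pixel by the rescaled height p_u of its Hue bin."""
    return p[hue_bins(hue_image, n_bins, hue_max)].astype(np.uint8)
```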

(2) Target search

In the Mean-Shift algorithm above, we showed how to determine the centroid of the search window. Once the centroid is found, the window center is moved to it; the centroid is then recomputed at the new location, and the process repeats until the centroid converges. The converged centroid is the position of the target center in the image frame; this is the target search process of Mean-shift, and Camshift invokes the same process for its own target search.

Camshift represents the tracked target with an elliptical region. The major axis, minor axis and orientation angle of the ellipse are computed through Equations (12)-(14):

Step 1 Compute the second-order moments of the target, as in Equation (12).

Step 2 Compute the intermediate parameters, as in Equation (13).

Step 3 Compute the major axis, minor axis and orientation angle of the ellipse, as in Equation (14).
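Equations (12)-(14) are not reproduced here. The sketch below assumes the second-moment formulation commonly attributed to Bradski's original CamShift description: $a=M_{20}/M_{00}-x_c^2$, $b=2(M_{11}/M_{00}-x_cy_c)$, $c=M_{02}/M_{00}-y_c^2$, $\theta=\frac{1}{2}\arctan\frac{b}{a-c}$, $l=\sqrt{\left((a+c)+\sqrt{b^2+(a-c)^2}\right)/2}$ and $w=\sqrt{\left((a+c)-\sqrt{b^2+(a-c)^2}\right)/2}$. Here $l$ and $w$ are standard deviations along the principal axes (implementations usually scale them before drawing), and the function name is hypothetical.

```python
import numpy as np


def ellipse_from_moments(prob):
    """Orientation and principal-axis lengths of a weighted region.

    prob: 2-D array of non-negative weights (e.g. the back projection
    inside the converged search window).
    Returns (theta, length, width): theta in radians from the x axis,
    with y pointing down as in image coordinates; length >= width.
    """
    ys, xs = np.mgrid[0:prob.shape[0], 0:prob.shape[1]]
    prob = prob.astype(float)
    m00 = prob.sum()
    xc = (xs * prob).sum() / m00
    yc = (ys * prob).sum() / m00
    # Second-order moments (assumed form of Eq. (12)).
    m20 = (xs ** 2 * prob).sum()
    m02 = (ys ** 2 * prob).sum()
    m11 = (xs * ys * prob).sum()
    # Intermediate parameters (assumed form of Eq. (13)).
    a = m20 / m00 - xc ** 2
    b = 2.0 * (m11 / m00 - xc * yc)
    c = m02 / m00 - yc ** 2
    # Orientation and axes (assumed form of Eq. (14)); atan2 handles a == c.
    theta = 0.5 * np.arctan2(b, a - c)
    root = np.sqrt(b ** 2 + (a - c) ** 2)
    length = np.sqrt((a + c + root) / 2.0)
    width = np.sqrt(max(a + c - root, 0.0) / 2.0)
    return theta, length, width
```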

(3) Player tracking based on Camshift algorithm

This paper uses the Camshift algorithm to track players. The player region obtained through moving target detection and recognition serves as the initial search window of Camshift. For the color feature, we use only the most distinctive part of each player, the upper body (excluding the head). An improved Camshift algorithm based on a background-weighted histogram is then used to track the players. The experiments in Section 4 indicate that this method tracks players effectively.
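The paper does not spell out its background-weighted histogram. A common formulation, from Comaniciu, Ramesh and Meer's kernel-based tracking, weights each bin by $v_u=\min(\hat{o}^*/\hat{o}_u,1)$, where $\hat{o}_u$ is the normalized histogram of the background around the target and $\hat{o}^*$ its smallest non-zero entry, so that colors common in the background (such as the court) count less. The sketch below assumes that formulation; the function name is hypothetical.

```python
import numpy as np


def background_weighted_histogram(target_hist, background_hist):
    """Down-weight target histogram bins that are common in the background.

    Both inputs are non-negative histograms over the same bins, and the
    background histogram is assumed to have at least one non-zero bin.
    Returns the re-weighted target histogram, normalized to sum to 1.
    """
    o = np.asarray(background_hist, dtype=float)
    o = o / o.sum()
    nz = o > 0
    o_star = o[nz].min()                     # smallest non-zero background bin
    v = np.ones_like(o)                      # bins absent from background keep 1
    v[nz] = np.minimum(o_star / o[nz], 1.0)  # v_u = min(o* / o_u, 1)
    weighted = np.asarray(target_hist, dtype=float) * v
    return weighted / weighted.sum()
```

The weighted histogram would then take the place of the plain target histogram in the back projection step.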

3.2. Moving Target Tracking based on Kalman Filter

3.2.1. Principle of the Kalman Filter

The Kalman filter is a linear recursive filter. It makes an optimal estimate of the system's next state from its previous state estimates and the new observation. The Kalman algorithm is built on a state equation and an observation equation.

The state equation is given in Equation (15).

The observation equation is given in Equation (16).

In this paper, we assume the system model is known: the state transition matrix $\phi_{k,k-1}$ and the observation matrix $H_k$ are known, and the process noise $W_{k-1}$ and the observation noise $V_k$ satisfy the standard assumptions (zero-mean white noise) with known statistics. Let $P_k$ be the posterior error covariance matrix between $x_k$ and its estimate $\hat{x}_k$, and $P'_k$ the prior error covariance matrix between $x_k$ and its prior estimate $\hat{x}'_k$. The Kalman filter proceeds in the following steps:

Step 1 At time $t_0$, initialize the state estimate $\hat{x}_0$ and the error covariance $P_0$.

Step 2 At time $t_k$, predict the system state with Equation (17).

Step 3 At time $t_k$, update the system state with Equation (18).
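Equations (15)-(18) are not reproduced in this text. Assuming the standard discrete Kalman filter in the notation above, with $Q_{k-1}$ and $R_k$ the covariance matrices of $W_{k-1}$ and $V_k$ and $K_k$ the Kalman gain, the state and observation equations, the prediction step and the update step read:

```latex
\begin{aligned}
x_k &= \phi_{k,k-1}\,x_{k-1} + W_{k-1}, \qquad y_k = H_k\,x_k + V_k,\\
\hat{x}'_k &= \phi_{k,k-1}\,\hat{x}_{k-1}, \qquad
P'_k = \phi_{k,k-1}\,P_{k-1}\,\phi_{k,k-1}^{T} + Q_{k-1},\\
K_k &= P'_k H_k^{T}\left(H_k P'_k H_k^{T} + R_k\right)^{-1},\\
\hat{x}_k &= \hat{x}'_k + K_k\left(y_k - H_k\,\hat{x}'_k\right), \qquad
P_k = \left(I - K_k H_k\right)P'_k .
\end{aligned}
```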

3.2.2. Basketball Tracking based on Kalman Filter

In basketball match videos, both the ball's own motion and changes in camera angle make the ball's movement in the image irregular. Because the interval between adjacent frames is very short, velocity alone is generally enough to describe the ball's motion trend. We therefore use the ball's position and velocity as its motion parameters and define the Kalman filter state as $(xs_k,ys_k,vx_k,vy_k)^T$. Only the target's position is observed in the image, so the observation vector is defined as $(xw_k,yw_k)^T$.

Assuming the ball moves at constant velocity within each time interval, the state transition matrix $\phi_{k,k-1}$ is defined in Equation (19).

The Kalman-filter-based basketball motion estimation algorithm is as follows:

(1) Initialize $x_0$ with the basketball position and velocity obtained above, and initialize $P_0$, $Q_0$ and $R_0$. If the initial velocity is unknown, it is set to 0.

(2) Before processing the next frame, compute the time interval $\Delta t$ since the previous frame and substitute it into the prediction Equation (17) to obtain the predicted motion state $\hat{x}'_k$. Search a region centered on the predicted position $(\hat{x}'s_k,\hat{y}'s_k)$ for the best match to the basketball template; if no match is found there, match over the full image. Substitute the matched position $(xw_k,yw_k)$ into the state correction equation to obtain $(\hat{x}s_k,\hat{y}s_k)$.

(3) Update the Kalman filter state with the observation $y_k=(xw_k,yw_k)^T$ and update the velocity with Equations (20) and (21).
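A minimal sketch of the filter for the ball, assuming Equation (19) is the usual constant-velocity transition matrix $\phi_{k,k-1}=\begin{pmatrix}1&0&\Delta t&0\\0&1&0&\Delta t\\0&0&1&0\\0&0&0&1\end{pmatrix}$ and $H_k=\begin{pmatrix}1&0&0&0\\0&1&0&0\end{pmatrix}$ for the state $(xs_k,ys_k,vx_k,vy_k)^T$. The class name and the noise settings `p0`, `q` and `r` are hypothetical, and the velocity here is estimated by the filter itself rather than by Equations (20)-(21), which are not reproduced.

```python
import numpy as np


class BallKalman:
    """Constant-velocity Kalman filter for the ball state (xs, ys, vx, vy)."""

    H = np.array([[1.0, 0.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0, 0.0]])   # only the position is observed

    def __init__(self, x0, y0, vx0=0.0, vy0=0.0, p0=100.0, q=1e-2, r=4.0):
        # An unknown initial velocity defaults to 0, as in step (1).
        self.x = np.array([x0, y0, vx0, vy0], dtype=float)
        self.P = np.eye(4) * p0
        self.q, self.R = q, np.eye(2) * r

    @staticmethod
    def transition(dt):
        """phi_{k,k-1} for constant velocity over an interval dt."""
        phi = np.eye(4)
        phi[0, 2] = phi[1, 3] = dt
        return phi

    def predict(self, dt):
        """Prediction step; returns the predicted position (xs', ys')."""
        phi = self.transition(dt)
        self.x = phi @ self.x
        self.P = phi @ self.P @ phi.T + self.q * np.eye(4)
        return self.x[:2].copy()

    def update(self, xw, yw):
        """Correction step with the observed position y_k = (xw, yw)."""
        y = np.array([xw, yw], dtype=float)
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ (y - self.H @ self.x)
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x.copy()
```

Each frame calls `predict` with the measured $\Delta t$, searches around the returned point, and then calls `update` with the matched position.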

After the Kalman prediction, the true position of the ball must be found near the predicted point. We use histogram matching to keep tracking fast, and we search with the diamond search method over a region centered on the predicted point and five times the size of the basketball template, instead of searching the full image. Let the template size be $M\times N$ with normalized histogram $q_u$, let the image being searched be $M'\times N'$, and let $p_u(x,y)$ be the normalized histogram of the $M\times N$ candidate region at $(x,y)$. The similarity is defined in Equation (22).
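Equation (22) is not reproduced here. The sketch below assumes the Bhattacharyya coefficient $\rho(x,y)=\sum_u\sqrt{p_u(x,y)\,q_u}$ as the histogram similarity and implements the diamond search pattern of Zhu and Ma: a large 9-point diamond moves until its centre is the best point, followed by one small 5-point refinement. The `score` callable stands in for computing $p_u(x,y)$ of the $M\times N$ candidate and comparing it with $q_u$; all names are hypothetical, and the start point must lie inside the bounds.

```python
import numpy as np

LARGE_DIAMOND = [(0, 0), (2, 0), (-2, 0), (0, 2), (0, -2),
                 (1, 1), (1, -1), (-1, 1), (-1, -1)]
SMALL_DIAMOND = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]


def bhattacharyya(p, q):
    """Similarity of two normalized histograms (1.0 means identical)."""
    return float(np.sum(np.sqrt(p * q)))


def diamond_search(score, start, bounds, max_steps=100):
    """Maximize score(x, y) from start using the diamond search pattern.

    score:  callable returning the similarity of the candidate at (x, y).
    start:  (x, y) start point, e.g. the Kalman-predicted ball position.
    bounds: (x_min, x_max, y_min, y_max) limits of the search region.
    """
    x_min, x_max, y_min, y_max = bounds

    def candidates(cx, cy, pattern):
        return [(cx + dx, cy + dy) for dx, dy in pattern
                if x_min <= cx + dx <= x_max and y_min <= cy + dy <= y_max]

    cx, cy = start
    for _ in range(max_steps):
        # Centre is listed first, so ties keep the window where it is.
        best = max(candidates(cx, cy, LARGE_DIAMOND), key=lambda p: score(*p))
        if best == (cx, cy):
            break
        cx, cy = best
    # One small-diamond refinement around the final large-diamond centre.
    return max(candidates(cx, cy, SMALL_DIAMOND), key=lambda p: score(*p))
```

In use, `bounds` would cover the area around the predicted point, about five times the template size as described above, and `score` would return `bhattacharyya(p_xy, q)` for the candidate histogram at $(x, y)$.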

In basketball match videos, the ball is difficult to detect accurately when it is occluded by a player, when it flies out of the court, or while a player is holding it. No accurate observation is then available, the Kalman filter cannot be updated, and tracking becomes imprecise. In this case, we first use the detected position and velocity of the ball to decide whether it has flown out of the court. If it has not, we use the run of frames in which the ball goes undetected to decide whether it is occluded or held: following the characteristics of basketball games, a short run suggests that the ball is occluded, while a long, uninterrupted run suggests that a player is carrying it. If the ball is occluded by a player, we fit a curve to its trajectory with the least squares method, predict its position in the next frame, and use this prediction as the observation to update the Kalman filter until the ball is detected again. If the ball is held by a player, we track the player closest to the point predicted by the fitted trajectory function.
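The paper does not say which curve it fits. This sketch assumes a quadratic in the frame index for each image coordinate, fitted by least squares with `numpy.polyfit`, and adds the occluded-versus-held decision with a hypothetical gap threshold of 8 frames; both function names are illustrative.

```python
import numpy as np


def predict_occluded_position(frames, xs, ys, next_frame, degree=2):
    """Least-squares polynomial fit of recent ball positions against frame index.

    Returns the extrapolated (x, y) at next_frame, which can stand in for the
    missing observation when updating the Kalman filter.
    """
    t = np.asarray(frames, dtype=float)
    cx = np.polyfit(t, np.asarray(xs, dtype=float), degree)
    cy = np.polyfit(t, np.asarray(ys, dtype=float), degree)
    return float(np.polyval(cx, next_frame)), float(np.polyval(cy, next_frame))


def classify_missing(missing_frames, occlusion_max=8):
    """Short detection gaps are treated as occlusion, long ones as a held ball."""
    return "occluded" if missing_frames <= occlusion_max else "held"
```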

4. Experimental Analysis and Results

4.1. Simulation Experiment Environment

The hardware environment is an Intel(R) Pentium(R) 3.0 GHz CPU with 2 GB of memory. The software environment is Windows XP, Visual C++ 6.0, Visual C# 2008, OpenCV and AForge.NET.

4.2. Experimental Results and Analysis of Moving Target Detection

In this part, we use the adaptive Gaussian mixture model to model the court pixels. Based on the distance between an observed value and the court pixel model, we decide whether the observation point is a background point or a target point. The experimental data are taken from NBA basketball match videos.

4.2.1. Experimental Results and Analysis of Player Tracking

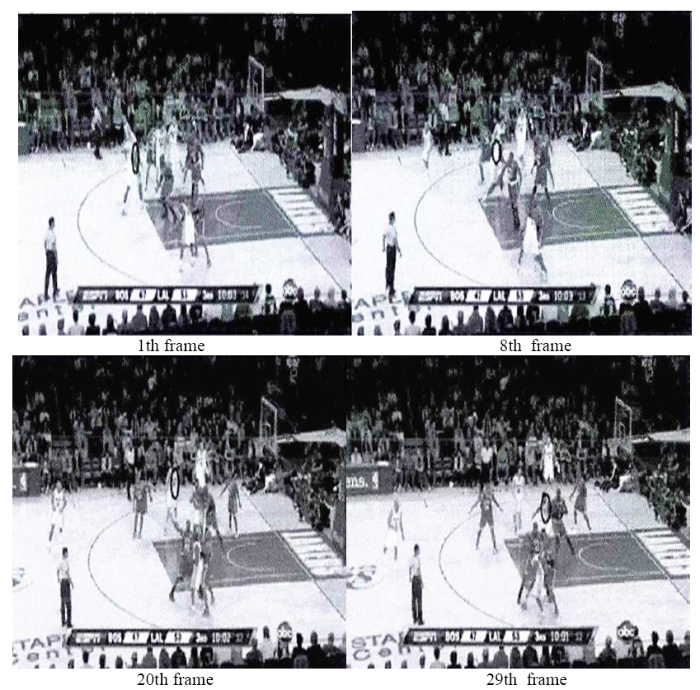

The experimental video is taken from a match between the Lakers and the Celtics. For testing, we choose the upper body of one Lakers player (in white) as the tracking target, as shown in Figure 1.

Figure 1. The tracking results of Camshift

Figure 1 shows that in the 20th frame, because of occlusion, Camshift tracked the upper body of another player. In the 29th frame, once the occlusion has ended, Camshift again tracks the correct target even though the target's appearance has changed somewhat. This indicates that Camshift tracks players effectively and recovers after a short occlusion.

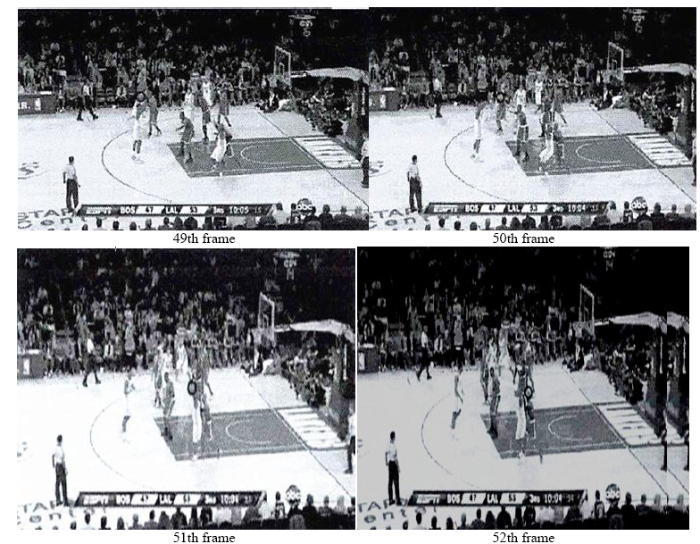

4.2.2.Experimental Results and Analysis of Basketball Tracking

The experimental video is chosen from a match between the Lakers and Celtics.In this paper, we use Kalman filter to trail basketball.It is shown in Figure 2.We find in the 50th frame, the basketball changed direction and speed as a result of external force.Therefore, big gap exists between observation value and true value.However, with basketball moving steadily towards new direction at new velocity, Kalman filter tracks it very well.This is seen in the 51th frame.It suggests that Kalman filter performs better in tracking basketball.

Figure 2.

Figure 2.

The tracing results based on Kalman filter

5.Conclusion

In this paper, we used an adaptive Gaussian mixture model to model the court pixels and to separate foreground points from background points. Next, with a target recognition method based on Hu invariant moments, we recognized the players and the basketball, completing the detection of these moving targets. For target tracking, taking into account the characteristics of basketball match videos, we applied the Camshift algorithm to track players and a Kalman-filter-based method to track the basketball. Experiments show that the proposed method performs well in both detection and tracking.

References

[1] “Research on Moving Target Detection and Tracking Algorithms.”

[2] “Mobile Background under the Research on Moving Object Detection and Tracking Technology.”

[3] “Research on Moving Object Detection and Tracking in Video Image.”

[4] “Research on Moving Target Detection and Tracking Algorithm based on OpenCV.”

[5] “Based on Dynamic Template Matching of Air Moving Target Detection and Tracking Control.”

[6] “Detection and Tracking of Video Moving Objects based on OpenCV.”

[7] “An Improved Moving Object Detection and Tracking Method.”

[8] “Research of Moving Object Detection and Tracking Algorithm.”

[9] “Analysis of Sports Video.”

[10] “Player Detection and Tracking in Sports Video.”

[11] “Sports Video Sequence of Moving Object based on IMM Tracking Algorithm.”

[12] “Research on the Motion Video Tracking Technology based on Meanshift Algorithm and Color Histogram Algorithm.”

[13] “Based on Underlying Visual Information of Sports Video Intelligent Analysis.”

[14] “Research on Moving Target Tracking and Detection Algorithm in Volleyball Video.”